The Fuzzy Suite

building blocks for a semantic runtime

Published Mar 12, 2024

One of the most interesting and imo still very underexplored things about language models is that they give computers the ability to interact with semantic content (yes I know about sentiment analysis, I mean in general) instead of just “data”.

Because anything we (people) do can be described with language, this opens up the possibility for computers to engage with the things we do. For example, as I navigate around an interface, each mouse movement, click, text input,

This post isn’t about that though

This post is about a fun and totally unrelated experiment I made, called the fuzzy suite. The fuzzy suite is composed of fuzzy_if, fuzzy_filter, and fuzzy_foreach. Together they create an interface between the fuzzy world of language, people, and ideas and the slightly more rigid world of computers. You can check it out on

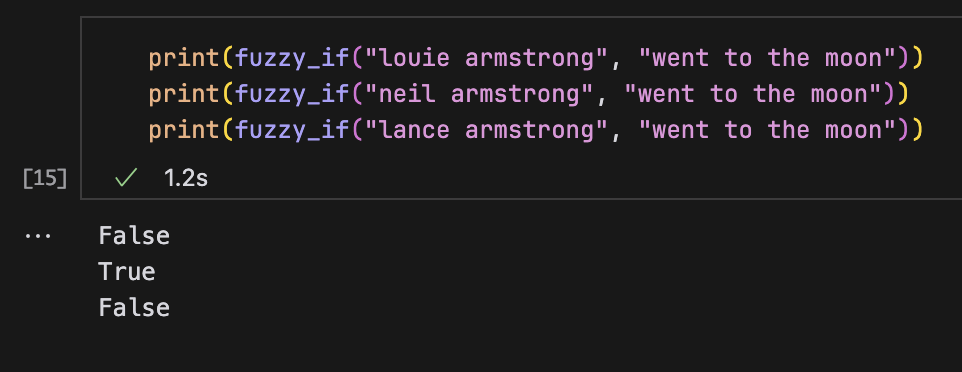

fuzzy_if

fuzzy_if tells you whether something is something else: you hand it some text and a plain-language condition, and it gives back a yes or no. It works like this:

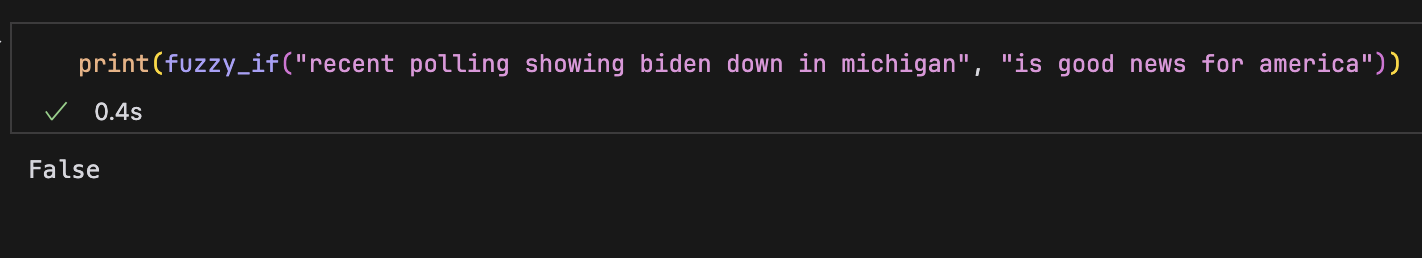
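For anyone who can't see the screenshot, here is a minimal sketch of how something like fuzzy_if could be put together. It is not the actual implementation: the `ask` parameter, the prompt wording, and the `toy_ask` stand-in are all assumptions made up for illustration, and a real version would pass in a function that calls a language model.

```python
from typing import Callable

# Hypothetical type: any function that takes a prompt and returns the model's reply.
Ask = Callable[[str], str]


def fuzzy_if(text: str, condition: str, ask: Ask) -> bool:
    """Return True if the model says `text` satisfies `condition`."""
    prompt = (
        "Answer with exactly one word, yes or no.\n"
        f"Text: {text}\n"
        f"Question: is this {condition}?"
    )
    reply = ask(prompt).strip().lower()
    return reply.startswith("yes")


def toy_ask(prompt: str) -> str:
    """Offline stand-in for a real model (assumption): plain keyword matching."""
    text_line = prompt.splitlines()[1].lower()
    return "yes" if "love" in text_line or "great" in text_line else "no"


if fuzzy_if("I love this app", "a positive review", toy_ask):
    print("positive")
```

Passing the model call in as a function keeps the sketch runnable offline, and it makes it easy to swap models later.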

It can also do political analysis! Oh no!

Let’s move on to fuzzy_filter.

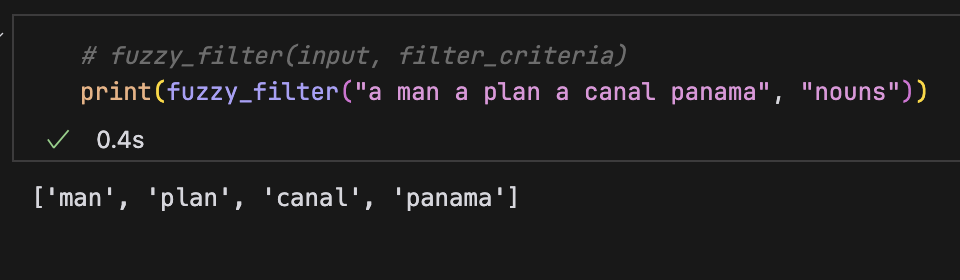

fuzzy_filter

This one lets you filter strings however you like! Here’s an example:

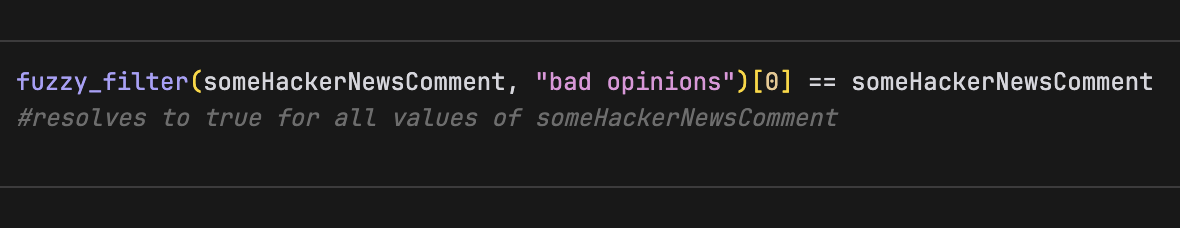

Here’s another!

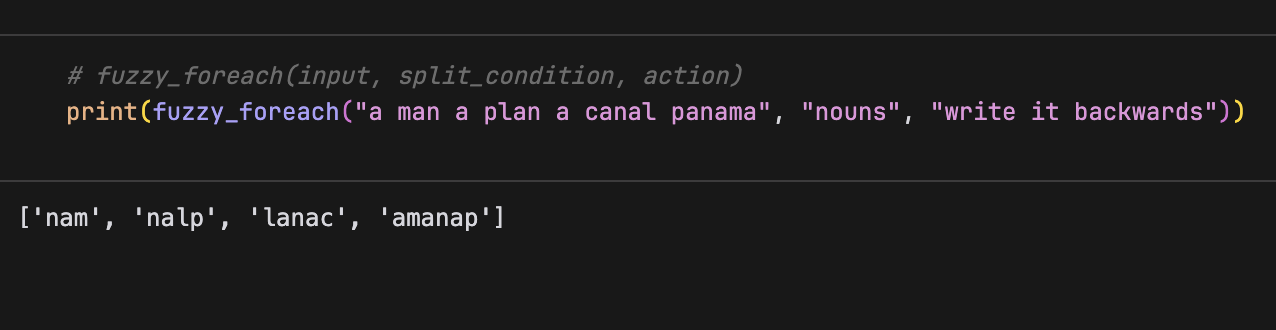
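In code, a filter like this might look roughly like the sketch below. The signature, the prompt, and the one-match-per-line reply format are assumptions rather than the real fuzzy_filter, and `toy_ask` is a made-up stand-in so the example runs without a model.

```python
from typing import Callable, List

Ask = Callable[[str], str]  # hypothetical: prompt in, model reply out


def fuzzy_filter(text: str, instruction: str, ask: Ask) -> List[str]:
    """Ask the model for the parts of `text` matching `instruction`, one per line."""
    prompt = (
        f"Instruction: {instruction}\n"
        "Copy out every part of the text that matches, one per line, nothing else.\n"
        f"Text: {text}"
    )
    reply = ask(prompt)
    # Keep non-empty lines and drop bullet characters a model might add.
    return [line.strip(" -*\t") for line in reply.splitlines() if line.strip(" -*\t")]


def toy_ask(prompt: str) -> str:
    """Offline stand-in (assumption): 'finds' sentences that mention a fruit."""
    text = prompt.split("Text: ", 1)[1]
    sentences = [s.strip() for s in text.split(".") if s.strip()]
    fruits = ("apple", "banana")
    return "\n".join("- " + s for s in sentences if any(f in s.lower() for f in fruits))


notes = "Buy an apple. Call the bank. Freeze the banana."
print(fuzzy_filter(notes, "sentences about fruit", toy_ask))
```

With the toy stand-in this prints `['Buy an apple', 'Freeze the banana']`. A real model would need stricter output parsing than stripping bullets.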

fuzzy_foreach

fuzzy_foreach uses fuzzy_filter to break a string into substrings, then performs some specified action on each substring, like this.
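The split-then-act shape described above can be sketched in a few lines. This is a guess at the structure, not the real code: `filter_fn` stands in for fuzzy_filter, and `toy_filter` is a hypothetical offline replacement that just splits on semicolons.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")
# Hypothetical signature for a fuzzy_filter-style function: (text, instruction) -> substrings.
Filter = Callable[[str, str], List[str]]


def fuzzy_foreach(
    text: str,
    instruction: str,
    action: Callable[[str], T],
    filter_fn: Filter,
) -> List[T]:
    """Split `text` with `filter_fn`, then run `action` on each piece."""
    return [action(piece) for piece in filter_fn(text, instruction)]


def toy_filter(text: str, instruction: str) -> List[str]:
    """Offline stand-in for fuzzy_filter (assumption): ignores the instruction, splits on ';'."""
    return [part.strip() for part in text.split(";") if part.strip()]


todo = "water the plants; email Sam; book flights"
print(fuzzy_foreach(todo, "each separate task", str.upper, toy_filter))
```

With the toy filter this prints `['WATER THE PLANTS', 'EMAIL SAM', 'BOOK FLIGHTS']`. The action can be any function, including another fuzzy call.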

okay why

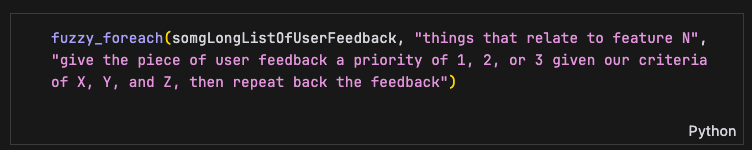

Good question! Imagine a purely hypothetical situation in which you have a lot of user feedback on something you’ve built and you want to categorize it. You could do something along these lines:
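As a rough sketch of that feedback idea: split the feedback into pieces, then check each piece against each category. Everything named here is hypothetical. `split` plays the role of fuzzy_filter, `matches` plays the role of fuzzy_if, and the toy versions use keywords where the real ones would ask a model.

```python
from collections import defaultdict
from typing import Callable, Dict, List

# Hypothetical stand-ins for the suite's functions; real versions would call a model.
Splitter = Callable[[str], List[str]]   # fuzzy_filter-style: text -> pieces
Matcher = Callable[[str, str], bool]    # fuzzy_if-style: (text, condition) -> bool


def categorize(
    feedback: str,
    categories: List[str],
    split: Splitter,
    matches: Matcher,
) -> Dict[str, List[str]]:
    """Bucket each piece of feedback under every category it matches."""
    buckets: Dict[str, List[str]] = defaultdict(list)
    for piece in split(feedback):
        for category in categories:
            if matches(piece, category):
                buckets[category].append(piece)
    return dict(buckets)


def toy_split(text: str) -> List[str]:
    """Offline stand-in (assumption): one piece of feedback per line."""
    return [line.strip() for line in text.splitlines() if line.strip()]


KEYWORDS = {"bug report": ("crash", "broken"), "feature request": ("please add", "wish")}


def toy_matches(text: str, condition: str) -> bool:
    """Offline stand-in (assumption): keyword lookup instead of a model call."""
    return any(k in text.lower() for k in KEYWORDS.get(condition, ()))


feedback = """The export button is broken
Please add dark mode
App crashes on login"""
print(categorize(feedback, list(KEYWORDS), toy_split, toy_matches))
```

With the toy functions, the two bug reports land under "bug report" and the dark mode request lands under "feature request".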

Sorry Kraftful! Lunch eaten! open source wins again 😎

okay actually why

I honestly haven’t taken much time to push this idea to its limits, but I would love to see someone do that, which is partly why I’m posting this. I do think something like this probably needs a more semantically fluent runtime in an app to be used to its full potential. Otherwise you’re stuck working with text that represents content (for lack of a better way of phrasing it), like reviews or feedback or messages, instead of text that represents actions.

I think a fuller, more interesting implementation of this would live in some kind of application runtime, where your actions, preferences, outside context, and state get pushed through chains of these things, helping the system decide which modes to activate, which buttons to show, and which copilot-esque actions to ghost-do for you.

goodbye

This is actually from